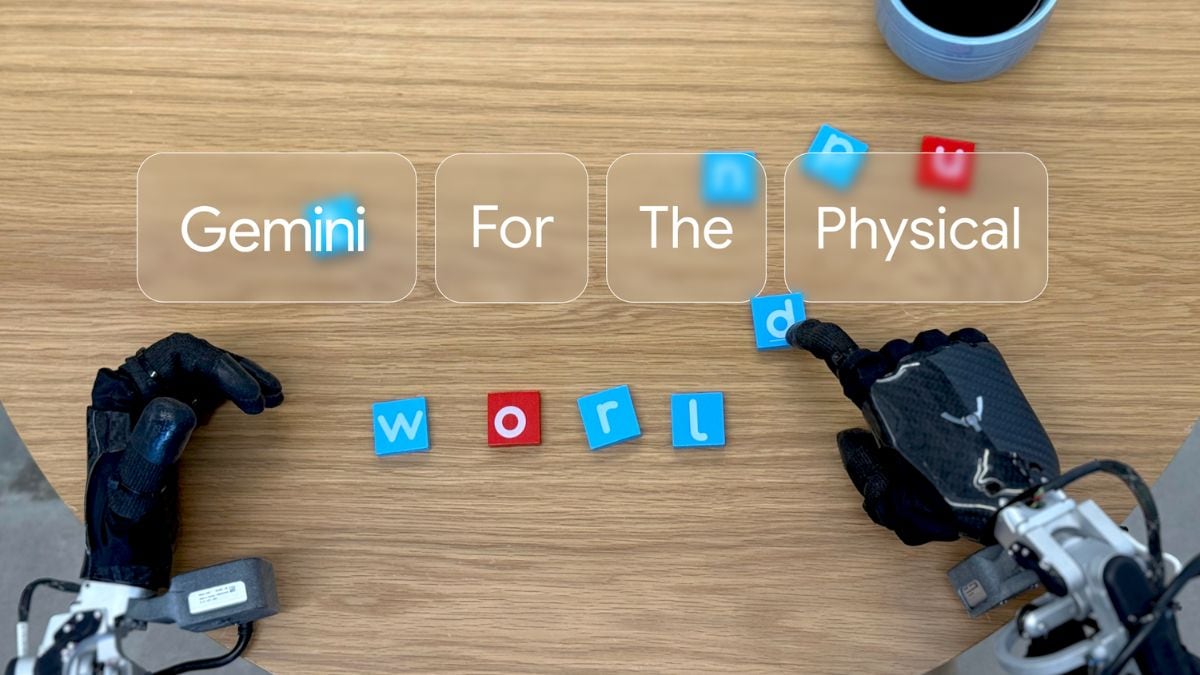

Google DeepMind unveiled two new artificial intelligence (AI) models on Thursday that can control robots and make them perform a wide range of tasks in real-world environments. Dubbed Gemini Robotics and Gemini Robotics-ER (embodied reasoning), these are advanced vision language models capable of displaying spatial intelligence and performing actions. The Mountain View-based tech giant also revealed that it is partnering with Apptronik to build Gemini 2.0-powered humanoid robots. The company is also testing these models to evaluate them further and understand how to improve them.

Google DeepMind Unveils Gemini Robotics AI Models

In a blog post, DeepMind detailed the new AI models for robots. Carolina Parada, Senior Director and Head of Robotics at Google DeepMind, said that for AI to be helpful to people in the physical world, it must demonstrate “embodied” reasoning: the ability to interact with and understand the physical world and to perform actions to complete tasks.

Gemini Robotics, the first of the two AI models, is an advanced vision-language-action (VLA) model built on the Gemini 2.0 model. It has a new output modality of “physical actions”, which allows the model to directly control robots.

DeepMind highlighted that to be useful in the physical world, AI models for robotics require three key capabilities: generality, interactivity, and dexterity. Generality refers to a model’s ability to adapt to different situations. Gemini Robotics is “adept at dealing with new objects, diverse instructions, and new environments,” claimed the company. Based on internal testing, the researchers found that the AI model more than doubles the performance on a comprehensive generalisation benchmark.

The AI model’s interactivity is built on the foundation of Gemini 2.0, and it can understand and respond to commands phrased in everyday, conversational language and in different languages. Google claimed that the model also continuously monitors its surroundings, detects changes to the environment or instructions, and adjusts its actions based on the input.

Finally, on dexterity, DeepMind claimed that Gemini Robotics can perform extremely complex, multi-step tasks that require precise manipulation of the physical environment. The researchers said the AI model can control robots to fold a piece of paper or pack a snack into a bag.

The second AI model, Gemini Robotics-ER, is also a vision language model, but it focuses on spatial reasoning. Drawing from Gemini 2.0’s coding and 3D detection, the AI model is said to display the ability to understand the right moves to manipulate an object in the real world. Highlighting an example, Parada said that when the model was shown a coffee mug, it was able to generate a command for a two-finger grasp to pick it up by the handle along a safe trajectory.

The AI model performs a large number of the steps necessary to control a robot in the physical world, including perception, state estimation, spatial understanding, planning, and code generation. Notably, neither of the two AI models is currently publicly available. DeepMind will likely first integrate the AI model into a humanoid robot and evaluate its capabilities before releasing the technology.